For anyone who uses social media, there’s an eerie feeling of familiarity when a platform seems to know you better than anyone else. Recommended videos hitting the mark, ads responding to recent conversations, memories appearing at just the right moment – we attribute this “magic” to algorithms learning from our direct interactions. However, this is merely the tip of a much more complex system.

From Computer Vision to Semantic Interpretation

When we upload a photo online, it is seen not only by other users but also by computer vision systems such as Google’s Vision API, which claims to “extract valuable insights from images, documents, and videos.” These technologies have evolved beyond identifying objects or faces; they now interpret semantics, deducing emotions, cultural contexts, and personality traits.

Tools like TheySeeYourPhotos, created by a former Google engineer to expose such practices, demonstrate this. The goal is to show how much personal and sensitive information can be inferred from a single photo using the same technology employed by big corporations.

Case Study: Inferred Profile from a Single Photo

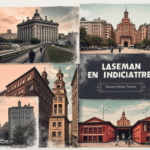

To explore the limits of this interpretative capability, we ran an experiment at Miguel Hernández University: we analyzed a personal photo with TheySeeYourPhotos and grouped the results into two levels.

- Descriptive analysis: The AI correctly identified the main scene (a young person near a railing and a monument) and approximated the geographical location. Although prone to factual errors (like slightly misestimating age), this level remains within expectations.

- Inferential analysis: This is where things get revealing and problematic. Based on the same image, the system constructed a detailed profile using statistical patterns and likely algorithmic biases:

  - Ethnic origin (Mediterranean) and estimated income level ($25,000 to $35,000).

  - Personality traits (calm, introverted) and interests (travel, fitness, food).

  - Ideological and religious orientation (agnostic, Democratic Party follower).

The ultimate goal of this exhaustive profiling is commercial segmentation. Based on the inferred profile, the tool suggested specific advertisers (Duolingo, Airbnb) as having a high likelihood of success. What matters here is not whether the profile is accurate but that a single image is enough to build a complex, machine-processable identity for an individual.
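To make the idea of a "machine-processable identity" concrete, here is a minimal Python sketch. It stores the attributes from the case study as structured data and ranks advertisers by interest overlap. The `InferredProfile` class, the `ADVERTISER_INTERESTS` rules, and the `suggest_advertisers` function are all hypothetical, invented for illustration. They do not describe how TheySeeYourPhotos or any real advertising platform works.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class InferredProfile:
    """Attributes inferred from one photo (values taken from the case study)."""
    ethnic_origin: str
    income_range_usd: tuple[int, int]
    personality: set[str] = field(default_factory=set)
    interests: set[str] = field(default_factory=set)
    religion: str = ""
    politics: str = ""


# Hypothetical targeting rules: the interests each advertiser wants to reach.
# Invented for illustration; not taken from any real ad system.
ADVERTISER_INTERESTS = {
    "Duolingo": {"travel", "languages"},
    "Airbnb": {"travel", "food"},
    "Hypothetical Car Brand": {"motorsport"},
}


def suggest_advertisers(profile: InferredProfile) -> list[str]:
    """Rank advertisers by how many of their target interests the profile shares."""
    scores = {
        name: len(targets & profile.interests)
        for name, targets in ADVERTISER_INTERESTS.items()
    }
    matched = [name for name, score in scores.items() if score > 0]
    # Highest overlap first; ties broken alphabetically for a stable order.
    return sorted(matched, key=lambda name: (-scores[name], name))


profile = InferredProfile(
    ethnic_origin="Mediterranean",
    income_range_usd=(25_000, 35_000),
    personality={"calm", "introverted"},
    interests={"travel", "fitness", "food"},
    religion="agnostic",
    politics="Democratic Party follower",
)

print(suggest_advertisers(profile))  # ['Airbnb', 'Duolingo']
```

Even this toy version shows the point of the case study: once inferences are stored as fields, matching a person to advertisers is a trivial lookup, whether or not the inferences are true.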

From Profiling to Influence: The Risk of Algorithmic Manipulation

If an algorithm can infer our ideology, is its purpose merely to offer us congruent content, or to reinforce that inclination so that we become more predictable and profitable?

This is the blurred line between personalization and manipulation. For instance, Meta has experimented with AI-generated users designed to interact with lonely users and increase the time they spend on the platform. If systems can simulate companionship, they can also create informational environments that subtly steer opinions and decisions.

Moreover, we lack genuine control over our data. Meta’s record €1.2 billion fine in 2023 for unlawfully transferring personal data from Europe to the US suggests that, for big tech, compliance is a risk-benefit calculation rather than an ethical principle.

Critical Awareness as a Defense Tool

The outcome of this mass profiling is the solidification of “filter bubbles,” a term coined by Eli Pariser to describe how algorithms confine us in informational environments that reinforce our beliefs. Each user ends up inhabiting a tailored but closed and polarized digital world.

Recognizing that each digital interaction fuels this cycle is the first step toward mitigating its effects. Tools like TheySeeYourPhotos are valuable because they reveal how the illusion of personalization that defines our online experience is constructed.

Our social media feeds, therefore, do not reflect reality; they are algorithmic constructions designed for each of us. Understanding this is crucial to protecting critical thinking and navigating an increasingly complex digital environment consciously.

- Q: What information can be inferred from a single photo using AI? A: Personal and sensitive information, including ethnic origin, income level, personality traits, interests, ideological orientation, and religious beliefs.

- Q: How do computer vision systems interpret photos? A: They go beyond identifying objects or faces to deduce emotions, cultural contexts, and personality traits.

- Q: What is the purpose of exhaustive profiling by algorithms? A: The ultimate goal is commercial segmentation, suggesting specific advertisers with a high likelihood of success based on the inferred profile.

- Q: What is the risk of algorithmic manipulation? A: Because the line between personalization and manipulation is blurred, systems can create informational environments that subtly steer opinions and decisions, potentially confining users in closed, polarized digital worlds.

- Q: How can users protect themselves from algorithmic manipulation? A: Recognizing that each digital interaction fuels the cycle of algorithmic profiling is crucial. Tools like TheySeeYourPhotos can reveal how personalization is constructed, enabling users to navigate digital environments more consciously.